Quickstart for the dbt Cloud Semantic Layer and Snowflake

Introduction

The dbt Semantic Layer, powered by MetricFlow, simplifies the setup of key business metrics. It centralizes definitions, avoids duplicate code, and ensures easy access to metrics in downstream tools. MetricFlow helps manage company metrics easier, allowing you to define metrics in your dbt project and query them in dbt Cloud with MetricFlow commands.

📹 Learn about the dbt Semantic Layer with on-demand video courses!

Explore our dbt Semantic Layer on-demand course to learn how to define and query metrics in your dbt project.

Additionally, dive into mini-courses for querying the dbt Semantic Layer in your favorite tools: Tableau, Hex, and Mode.

This quickstart guide is designed for dbt Cloud users using Snowflake as their data platform. It focuses on building and defining metrics, setting up the dbt Semantic Layer in a dbt Cloud project, and querying metrics in Google Sheets.

For users on different data platforms

If you're using a data platform other than Snowflake, this guide is also applicable to you. You can adapt the setup for your specific platform by following the account setup and data loading instructions detailed in the following tabs for each respective platform.

The rest of this guide applies universally across all supported platforms, ensuring you can fully leverage the dbt Semantic Layer.

- BigQuery

- Databricks

- Microsoft Fabric

- Redshift

- Starburst Galaxy

Open a new tab and follow these quick steps for account setup and data loading instructions:

Open a new tab and follow these quick steps for account setup and data loading instructions:

Open a new tab and follow these quick steps for account setup and data loading instructions:

Open a new tab and follow these quick steps for account setup and data loading instructions:

Open a new tab and follow these quick steps for account setup and data loading instructions:

Prerequisites

-

You need a dbt Cloud Trial, Team, or Enterprise account for all deployments. Contact your representative for Single-tenant setup; otherwise, create an account using this guide.

-

Have the correct dbt Cloud license and permissions based on your plan:

More info on license and permissions -

Production and development environments must be on dbt version 1.6 or higher. Alternatively, set your environment to Versionless to always get the latest updates.

-

Create a trial Snowflake account:

- Select the Enterprise Snowflake edition with ACCOUNTADMIN access. Consider organizational questions when choosing a cloud provider, refer to Snowflake's Introduction to Cloud Platforms.

- Select a cloud provider and region. All cloud providers and regions will work so choose whichever you prefer.

-

Basic understanding of SQL and dbt. For example, you've used dbt before or have completed the dbt Fundamentals course.

What you'll learn

This guide will cover the following topics:

- Create a new Snowflake worksheet and set up your environment

- Load sample data into your Snowflake account

- Connect dbt Cloud to Snowflake

- Set up a dbt Cloud managed repository

- Initialize a dbt Cloud project and start developing

- Build your dbt Cloud project

- Create a semantic model in dbt Cloud

- Define metrics in dbt Cloud

- Add second semantic model

- Test and query metrics in dbt Cloud

- Run a production job in dbt Cloud

- Set up dbt Semantic Layer in dbt Cloud

- Connect and query metrics with Google Sheets

Create new Snowflake worksheet and set up environment

- Log in to your trial Snowflake account.

- In the Snowflake user interface (UI), click + Worksheet in the upper right corner.

- Select SQL Worksheet to create a new worksheet.

Set up Snowflake environment

The data used here is stored as CSV files in a public S3 bucket and the following steps will guide you through how to prepare your Snowflake account for that data and upload it.

Create a new virtual warehouse, two new databases (one for raw data, the other for future dbt development), and two new schemas (one for jaffle_shop data, the other for stripe data).

-

Run the following SQL commands one by one by typing them into the Editor of your new Snowflake SQL worksheet to set up your environment.

-

Click Run in the upper right corner of the UI for each one:

-- Create a virtual warehouse named 'transforming'

create warehouse transforming;

-- Create two databases: one for raw data and another for analytics

create database raw;

create database analytics;

-- Within the 'raw' database, create two schemas: 'jaffle_shop' and 'stripe'

create schema raw.jaffle_shop;

create schema raw.stripe;

Load data into Snowflake

Now that your environment is set up, you can start loading data into it. You will be working within the raw database, using the jaffle_shop and stripe schemas to organize your tables.

- Create customer table. First, delete all contents (empty) in the Editor of the Snowflake worksheet. Then, run this SQL command to create the customer table in the

jaffle_shopschema:

create table raw.jaffle_shop.customers

( id integer,

first_name varchar,

last_name varchar

);

You should see a ‘Table CUSTOMERS successfully created.’ message.

- Load data. After creating the table, delete all contents in the Editor. Run this command to load data from the S3 bucket into the customer table:

copy into raw.jaffle_shop.customers (id, first_name, last_name)

from 's3://dbt-tutorial-public/jaffle_shop_customers.csv'

file_format = (

type = 'CSV'

field_delimiter = ','

skip_header = 1

);

You should see a confirmation message after running the command.

- Create

orderstable. Delete all contents in the Editor. Run the following command to create…

create table raw.jaffle_shop.orders

( id integer,

user_id integer,

order_date date,

status varchar,

_etl_loaded_at timestamp default current_timestamp

);

You should see a confirmation message after running the command.

- Load data. Delete all contents in the Editor, then run this command to load data into the orders table:

copy into raw.jaffle_shop.orders (id, user_id, order_date, status)

from 's3://dbt-tutorial-public/jaffle_shop_orders.csv'

file_format = (

type = 'CSV'

field_delimiter = ','

skip_header = 1

);

You should see a confirmation message after running the command.

- Create

paymenttable. Delete all contents in the Editor. Run the following command to create the payment table:

create table raw.stripe.payment

( id integer,

orderid integer,

paymentmethod varchar,

status varchar,

amount integer,

created date,

_batched_at timestamp default current_timestamp

);

You should see a confirmation message after running the command.

- Load data. Delete all contents in the Editor. Run the following command to load data into the payment table:

copy into raw.stripe.payment (id, orderid, paymentmethod, status, amount, created)

from 's3://dbt-tutorial-public/stripe_payments.csv'

file_format = (

type = 'CSV'

field_delimiter = ','

skip_header = 1

);

You should see a confirmation message after running the command.

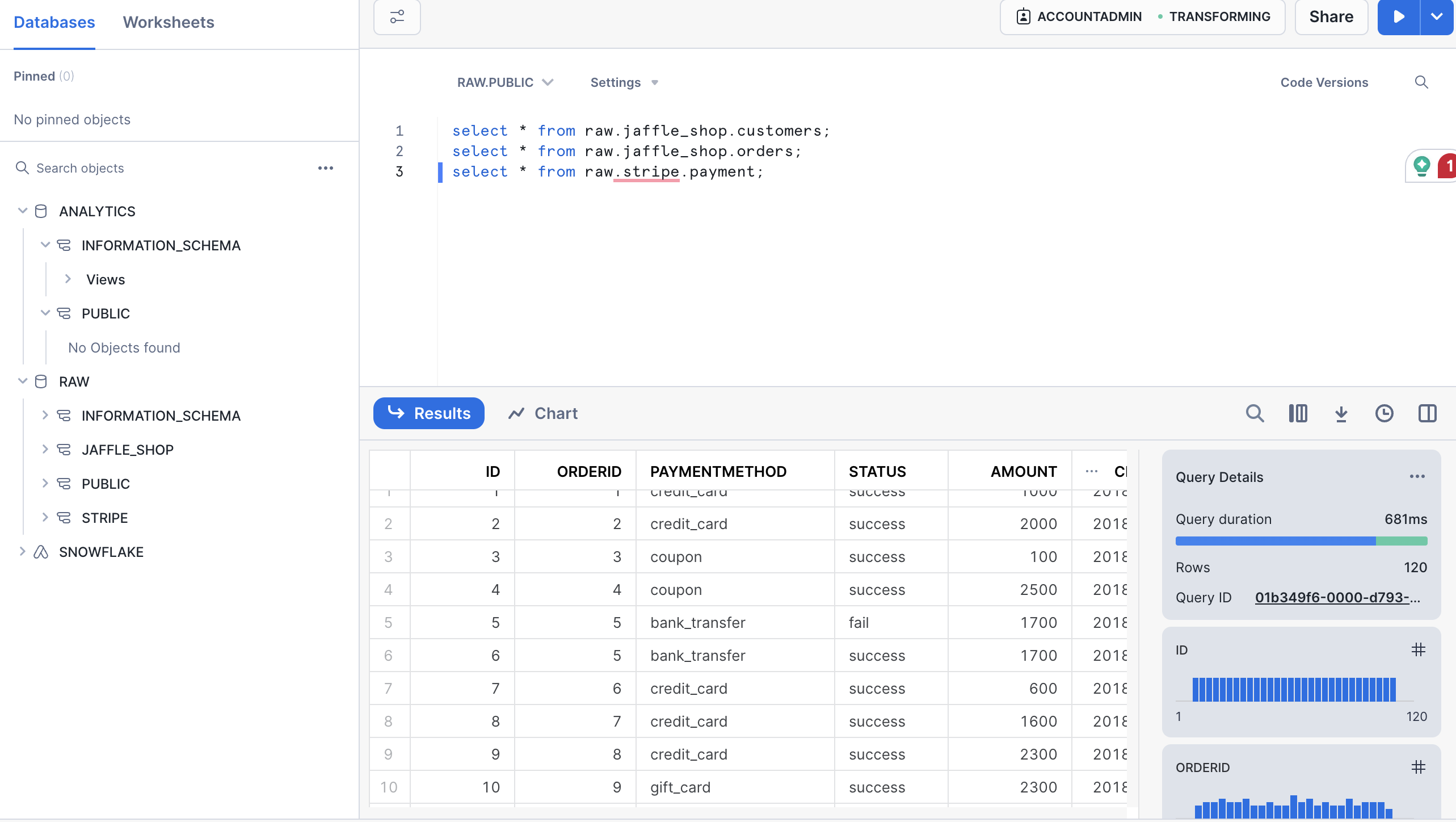

- Verify data. Verify that the data is loaded by running these SQL queries. Confirm that you can see output for each one, like the following confirmation image.

select * from raw.jaffle_shop.customers;

select * from raw.jaffle_shop.orders;

select * from raw.stripe.payment;

Connect dbt Cloud to Snowflake

There are two ways to connect dbt Cloud to Snowflake. The first option is Partner Connect, which provides a streamlined setup to create your dbt Cloud account from within your new Snowflake trial account. The second option is to create your dbt Cloud account separately and build the Snowflake connection yourself (connect manually). If you want to get started quickly, dbt Labs recommends using Partner Connect. If you want to customize your setup from the very beginning and gain familiarity with the dbt Cloud setup flow, dbt Labs recommends connecting manually.

- Use Partner Connect

- Connect manually

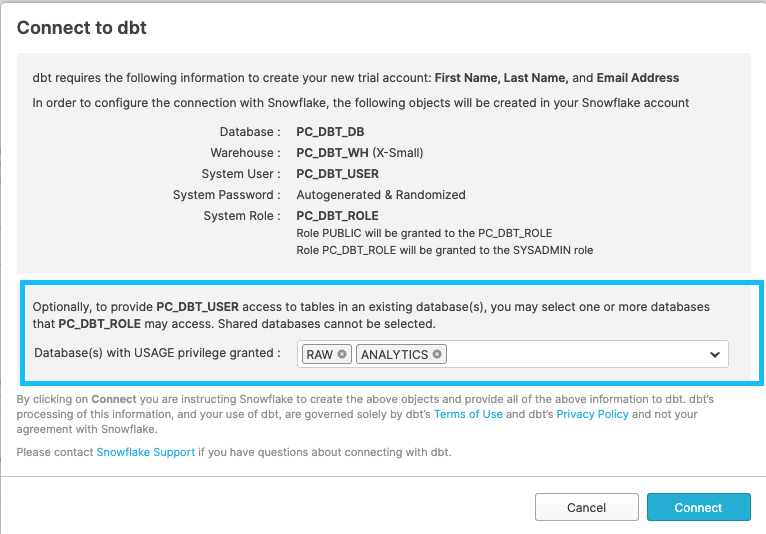

Using Partner Connect allows you to create a complete dbt account with your Snowflake connection, a managed repository, environments, and credentials.

-

In the Snowflake UI, click on the home icon in the upper left corner. In the left sidebar, select Data Products. Then, select Partner Connect. Find the dbt tile by scrolling or by searching for dbt in the search bar. Click the tile to connect to dbt.

If you’re using the classic version of the Snowflake UI, you can click the Partner Connect button in the top bar of your account. From there, click on the dbt tile to open up the connect box.

-

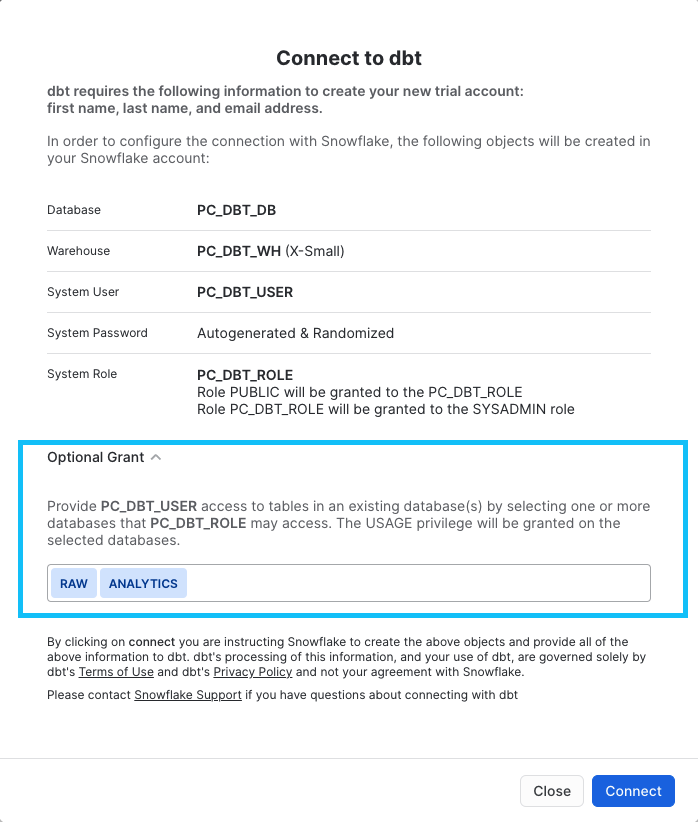

In the Connect to dbt popup, find the Optional Grant option and select the RAW and ANALYTICS databases. This will grant access for your new dbt user role to each selected database. Then, click Connect.

-

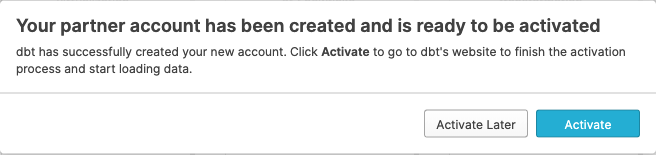

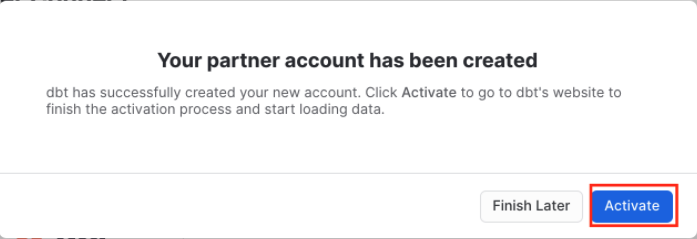

Click Activate when a popup appears:

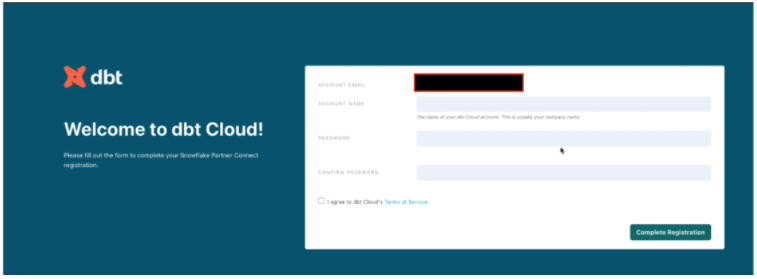

- After the new tab loads, you will see a form. If you already created a dbt Cloud account, you will be asked to provide an account name. If you haven't created an account, you will be asked to provide an account name and password.

-

After you have filled out the form and clicked Complete Registration, you will be logged into dbt Cloud automatically.

-

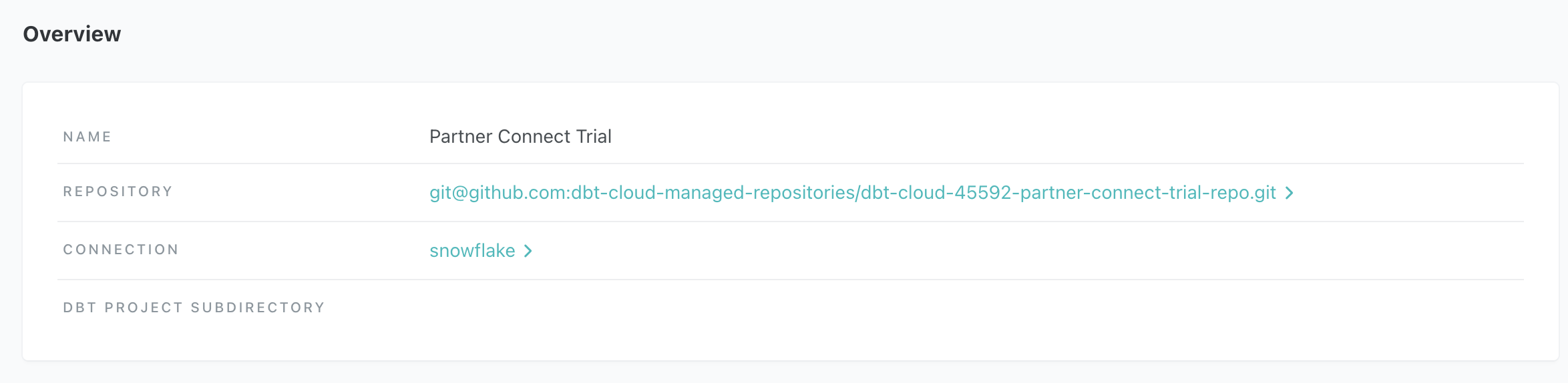

From your Account Settings in dbt Cloud (using the gear menu in the upper right corner), choose the "Partner Connect Trial" project and select snowflake in the overview table. Select Edit and update the Database field to

analyticsand the Warehouse field totransforming.

-

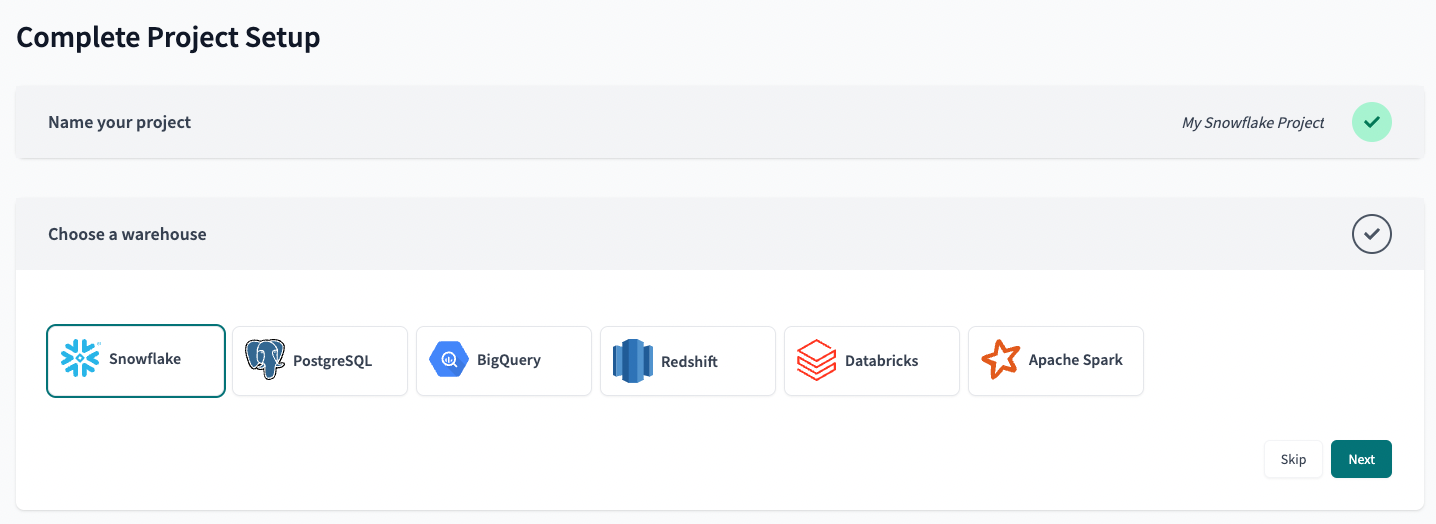

Create a new project in dbt Cloud. From Account settings (using the gear menu in the top right corner), click + New Project.

-

Enter a project name and click Continue.

-

For the warehouse, click Snowflake then Next to set up your connection.

-

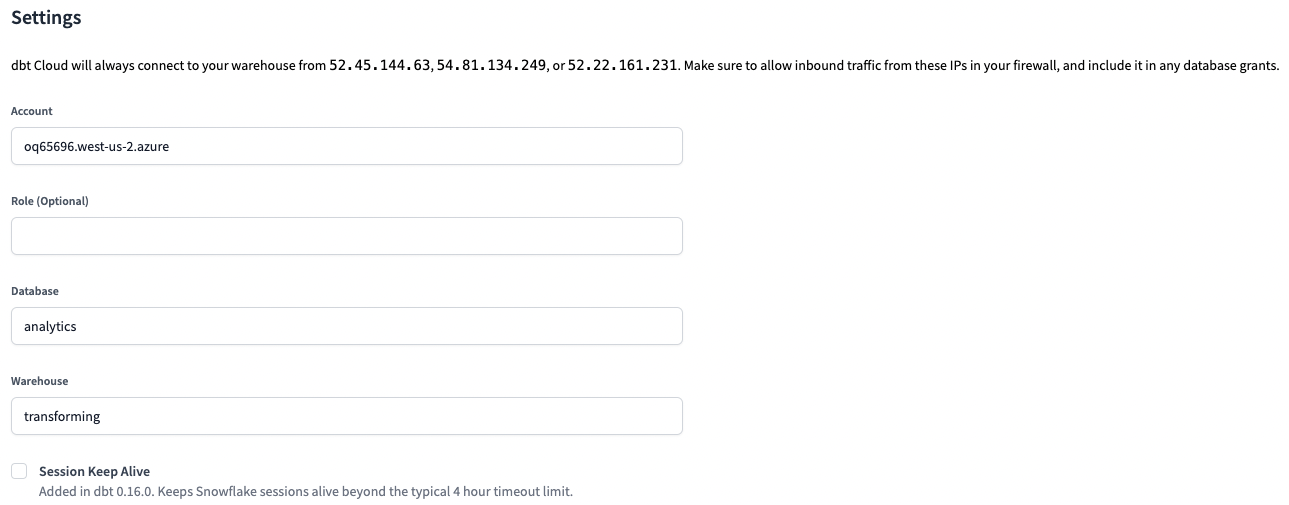

Enter your Settings for Snowflake with:

-

Account — Find your account by using the Snowflake trial account URL and removing

snowflakecomputing.com. The order of your account information will vary by Snowflake version. For example, Snowflake's Classic console URL might look like:oq65696.west-us-2.azure.snowflakecomputing.com. The AppUI or Snowsight URL might look more like:snowflakecomputing.com/west-us-2.azure/oq65696. In both examples, your account will be:oq65696.west-us-2.azure. For more information, see Account Identifiers in the Snowflake docs.✅ db5261993ordb5261993.east-us-2.azure

❌ db5261993.eu-central-1.snowflakecomputing.com -

Role — Leave blank for now. You can update this to a default Snowflake role later.

-

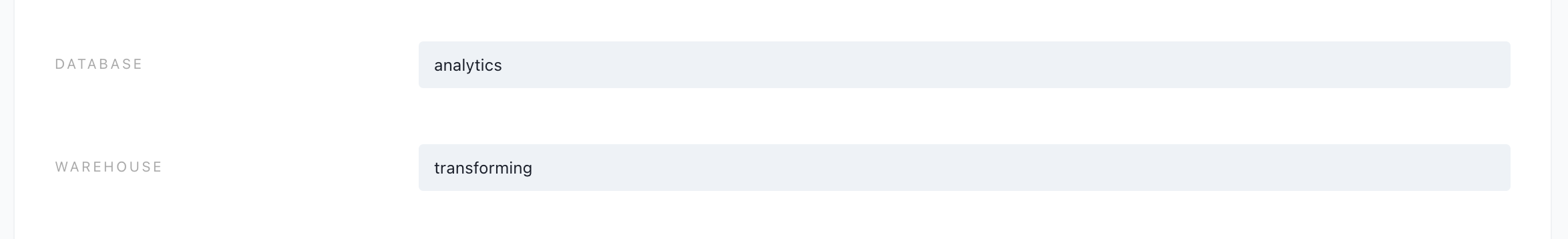

Database —

analytics. This tells dbt to create new models in the analytics database. -

Warehouse —

transforming. This tells dbt to use the transforming warehouse that was created earlier.

-

-

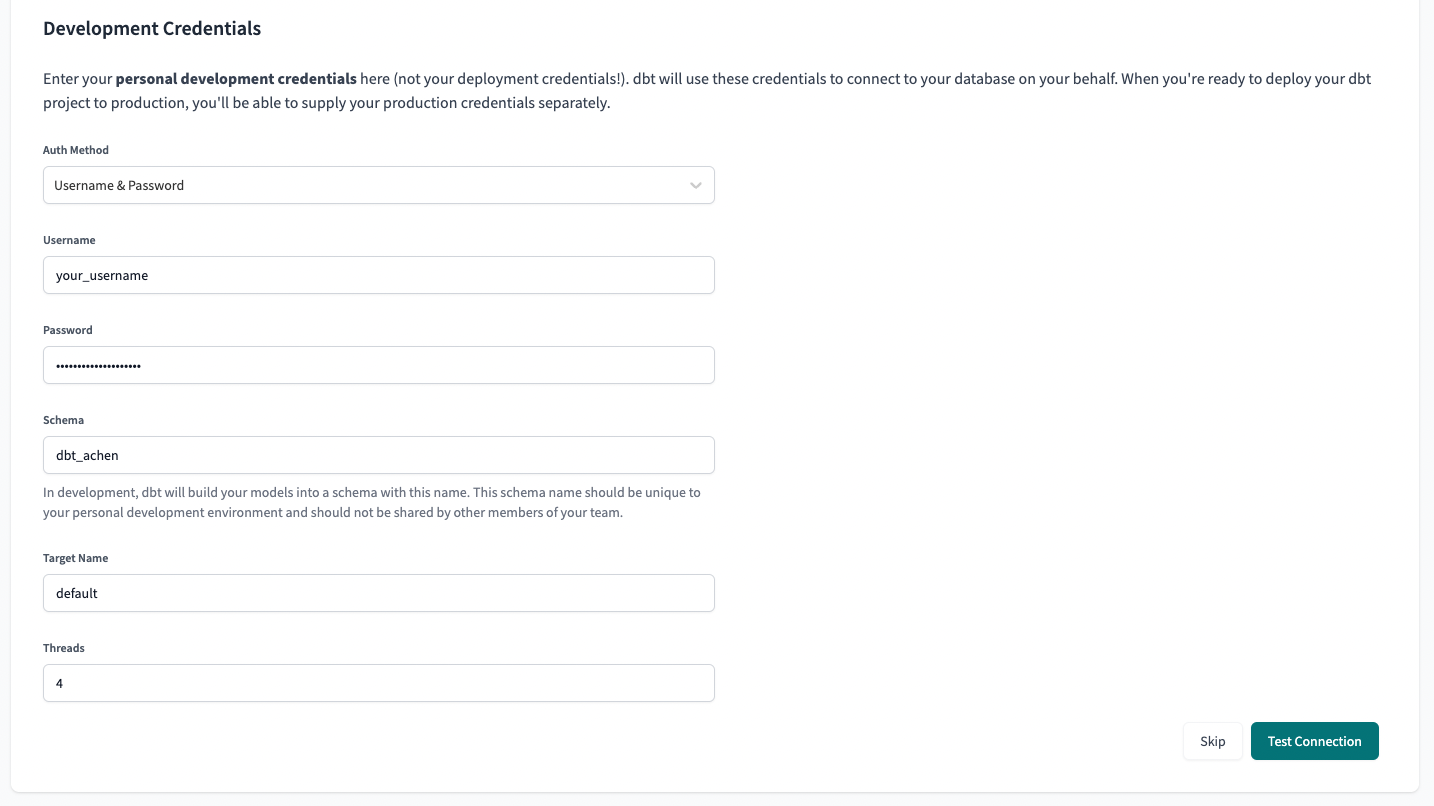

Enter your Development Credentials for Snowflake with:

- Username — The username you created for Snowflake. The username is not your email address and is usually your first and last name together in one word.

- Password — The password you set when creating your Snowflake account.

- Schema — You’ll notice that the schema name has been auto-created for you. By convention, this is

dbt_<first-initial><last-name>. This is the schema connected directly to your development environment, and it's where your models will be built when running dbt within the Cloud IDE. - Target name — Leave as the default.

- Threads — Leave as 4. This is the number of simultaneous connects that dbt Cloud will make to build models concurrently.

-

Click Test Connection. This verifies that dbt Cloud can access your Snowflake account.

-

If the connection test succeeds, click Next. If it fails, you may need to check your Snowflake settings and credentials.

Set up a dbt Cloud managed repository

If you used Partner Connect, you can skip to initializing your dbt project as Partner Connect provides you with a managed repository. Otherwise, you will need to create your repository connection.

When you develop in dbt Cloud, you can leverage Git to version control your code.

To connect to a repository, you can either set up a dbt Cloud-hosted managed repository or directly connect to a supported git provider. Managed repositories are a great way to trial dbt without needing to create a new repository. In the long run, it's better to connect to a supported git provider to use features like automation and continuous integration.

To set up a managed repository:

- Under "Setup a repository", select Managed.

- Type a name for your repo such as

bbaggins-dbt-quickstart - Click Create. It will take a few seconds for your repository to be created and imported.

- Once you see the "Successfully imported repository," click Continue.

Initialize your dbt project and start developing

This guide assumes you use the dbt Cloud IDE to develop your dbt project and define metrics. However, the dbt Cloud IDE doesn't support using MetricFlow commands to query or preview metrics (support coming soon).

To query and preview metrics in your development tool, you can use the dbt Cloud CLI to run the MetricFlow commands.

Now that you have a repository configured, you can initialize your project and start development in dbt Cloud using the IDE:

- Click Start developing in the dbt Cloud IDE. It might take a few minutes for your project to spin up for the first time as it establishes your git connection, clones your repo, and tests the connection to the warehouse.

- Above the file tree to the left, click Initialize your project. This builds out your folder structure with example models.

- Make your initial commit by clicking Commit and sync. Use the commit message

initial commit. This creates the first commit to your managed repo and allows you to open a branch where you can add a new dbt code. - You can now directly query data from your warehouse and execute

dbt run. You can try this out now:- Delete the models/examples folder in the File Explorer.

- Click + Create new file, add this query to the new file, and click Save as to save the new file:

select * from raw.jaffle_shop.customers - In the command line bar at the bottom, enter dbt run and click Enter. You should see a dbt run succeeded message.

Build your dbt project

The next step is to build your project. This involves adding sources, staging models, business-defined entities, and packages to your project.

Add sources

Sources in dbt are the raw data tables you'll transform. By organizing your source definitions, you document the origin of your data. It also makes your project and transformation more reliable, structured, and understandable.

You have two options for working with files in the dbt Cloud IDE:

- Create a new branch (recommended) — Create a new branch to edit and commit your changes. Navigate to Version Control on the left sidebar and click Create branch.

- Edit in the protected primary branch — If you prefer to edit, format, or lint files and execute dbt commands directly in your primary git branch, use this option. The dbt Cloud IDE prevents commits to the protected branch so you'll be prompted to commit your changes to a new branch.

Name the new branch build-project.

- Hover over the

modelsdirectory and click the three-dot menu (...), then select Create file. - Name the file

staging/jaffle_shop/src_jaffle_shop.yml, then click Create. - Copy the following text into the file and click Save.

version: 2

sources:

- name: jaffle_shop

database: raw

schema: jaffle_shop

tables:

- name: customers

- name: orders

In your source file, you can also use the Generate model button to create a new model file for each source. This creates a new file in the models directory with the given source name and fill in the SQL code of the source definition.

- Hover over the

modelsdirectory and click the three dot menu (...), then select Create file. - Name the file

staging/stripe/src_stripe.yml, then click Create. - Copy the following text into the file and click Save.

version: 2

sources:

- name: stripe

database: raw

schema: stripe

tables:

- name: payment

Add staging models

Staging models are the first transformation step in dbt. They clean and prepare your raw data, making it ready for more complex transformations and analyses. Follow these steps to add your staging models to your project.

- In the

jaffle_shopsub-directory, create the filestg_customers.sql. Or, you can use the Generate model button to create a new model file for each source. - Copy the following query into the file and click Save.

select

id as customer_id,

first_name,

last_name

from {{ source('jaffle_shop', 'customers') }}

- In the same

jaffle_shopsub-directory, create the filestg_orders.sql - Copy the following query into the file and click Save.

select

id as order_id,

user_id as customer_id,

order_date,

status

from {{ source('jaffle_shop', 'orders') }}

- In the

stripesub-directory, create the filestg_payments.sql. - Copy the following query into the file and click Save.

select

id as payment_id,

orderid as order_id,

paymentmethod as payment_method,

status,

-- amount is stored in cents, convert it to dollars

amount / 100 as amount,

created as created_at

from {{ source('stripe', 'payment') }}

- Enter

dbt runin the command prompt at the bottom of the screen. You should get a successful run and see the three models.

Add business-defined entities

This phase involves creating models that serve as the entity layer or concept layer of your dbt project, making the data ready for reporting and analysis. It also includes adding packages and the MetricFlow time spine that extend dbt's functionality.

This phase is the marts layer, which brings together modular pieces into a wide, rich vision of the entities an organization cares about.

- Create the file

models/marts/fct_orders.sql. - Copy the following query into the file and click Save.

with orders as (

select * from {{ ref('stg_orders' )}}

),

payments as (

select * from {{ ref('stg_payments') }}

),

order_payments as (

select

order_id,

sum(case when status = 'success' then amount end) as amount

from payments

group by 1

),

final as (

select

orders.order_id,

orders.customer_id,

orders.order_date,

coalesce(order_payments.amount, 0) as amount

from orders

left join order_payments using (order_id)

)

select * from final

- In the

models/martsdirectory, create the filedim_customers.sql. - Copy the following query into the file and click Save.

with customers as (

select * from {{ ref('stg_customers')}}

),

orders as (

select * from {{ ref('fct_orders')}}

),

customer_orders as (

select

customer_id,

min(order_date) as first_order_date,

max(order_date) as most_recent_order_date,

count(order_id) as number_of_orders,

sum(amount) as lifetime_value

from orders

group by 1

),

final as (

select

customers.customer_id,

customers.first_name,

customers.last_name,

customer_orders.first_order_date,

customer_orders.most_recent_order_date,

coalesce(customer_orders.number_of_orders, 0) as number_of_orders,

customer_orders.lifetime_value

from customers

left join customer_orders using (customer_id)

)

select * from final

- In your main directory, create the file

packages.yml. - Copy the following text into the file and click Save.

packages:

- package: dbt-labs/dbt_utils

version: 1.1.1

- In the

modelsdirectory, create the filemetrics/metricflow_time_spine.sqlin your main directory. - Copy the following query into the file and click Save.

{{

config(

materialized = 'table',

)

}}

with days as (

{{

dbt_utils.date_spine(

'day',

"to_date('01/01/2000','mm/dd/yyyy')",

"to_date('01/01/2027','mm/dd/yyyy')"

)

}}

),

final as (

select cast(date_day as date) as date_day

from days

)

select * from final

- Enter

dbt runin the command prompt at the bottom of the screen. You should get a successful run message and also see in the run details that dbt has successfully built five models.

Create semantic models

Semantic models contain many object types (such as entities, measures, and dimensions) that allow MetricFlow to construct the queries for metric definitions.

- Each semantic model will be 1:1 with a dbt SQL/Python model.

- Each semantic model will contain (at most) 1 primary or natural entity.

- Each semantic model will contain zero, one, or many foreign or unique entities used to connect to other entities.

- Each semantic model may also contain dimensions, measures, and metrics. This is what actually gets fed into and queried by your downstream BI tool.

In the following steps, semantic models enable you to define how to interpret the data related to orders. It includes entities (like ID columns serving as keys for joining data), dimensions (for grouping or filtering data), and measures (for data aggregations).

- In the

metricssub-directory, create a new filefct_orders.yml. - Add the following code to that newly created file:

semantic_models:

- name: orders

defaults:

agg_time_dimension: order_date

description: |

Order fact table. This table’s grain is one row per order.

model: ref('fct_orders')

The following sections explain dimensions, entities, and measures in more detail, showing how they each play a role in semantic models.

- Entities act as unique identifiers (like ID columns) that link data together from different tables.

- Dimensions categorize and filter data, making it easier to organize.

- Measures calculates data, providing valuable insights through aggregation.

Entities

Entities are a real-world concept in a business, serving as the backbone of your semantic model. These are going to be ID columns (like order_id) in our semantic models. These will serve as join keys to other semantic models.

Add entities to your fct_orders.yml semantic model file:

semantic_models:

- name: orders

defaults:

agg_time_dimension: order_date

description: |

Order fact table. This table’s grain is one row per order.

model: ref('fct_orders')

# Newly added

entities:

- name: order_id

type: primary

- name: customer_id

type: foreign

Dimensions

Dimensions are a way to group or filter information based on categories or time.

Add dimensions to your fct_orders.yml semantic model file:

semantic_models:

- name: orders

defaults:

agg_time_dimension: order_date

description: |

Order fact table. This table’s grain is one row per order.

model: ref('fct_orders')

entities:

- name: order_id

type: primary

- name: customer_id

type: foreign

# Newly added

dimensions:

- name: order_date

type: time

type_params:

time_granularity: day

Measures

Measures are aggregations performed on columns in your model. Often, you’ll find yourself using them as final metrics themselves. Measures can also serve as building blocks for more complicated metrics.

Add measures to your fct_orders.yml semantic model file:

semantic_models:

- name: orders

defaults:

agg_time_dimension: order_date

description: |

Order fact table. This table’s grain is one row per order.

model: ref('fct_orders')

entities:

- name: order_id

type: primary

- name: customer_id

type: foreign

dimensions:

- name: order_date

type: time

type_params:

time_granularity: day

# Newly added

measures:

- name: order_total

description: The total amount for each order including taxes.

agg: sum

expr: amount

- name: order_count

expr: 1

agg: sum

- name: customers_with_orders

description: Distinct count of customers placing orders

agg: count_distinct

expr: customer_id

- name: order_value_p99 ## The 99th percentile order value

expr: amount

agg: percentile

agg_params:

percentile: 0.99

use_discrete_percentile: True

use_approximate_percentile: False

Define metrics

Metrics are the language your business users speak and measure business performance. They are an aggregation over a column in your warehouse that you enrich with dimensional cuts.

There are different types of metrics you can configure:

- Conversion metrics — Track when a base event and a subsequent conversion event occur for an entity within a set time period.

- Cumulative metrics — Aggregate a measure over a given window. If no window is specified, the window will accumulate the measure over all of the recorded time period. Note that you must create the time spine model before you add cumulative metrics.

- Derived metrics — Allows you to do calculations on top of metrics.

- Simple metrics — Directly reference a single measure without any additional measures involved.

- Ratio metrics — Involve a numerator metric and a denominator metric. A constraint string can be applied to both the numerator and denominator or separately to the numerator or denominator.

Once you've created your semantic models, it's time to start referencing those measures you made to create some metrics:

Add metrics to your fct_orders.yml semantic model file:

semantic_models:

- name: orders

defaults:

agg_time_dimension: order_date

description: |

Order fact table. This table’s grain is one row per order

model: ref('fct_orders')

entities:

- name: order_id

type: primary

- name: customer_id

type: foreign

dimensions:

- name: order_date

type: time

type_params:

time_granularity: day

measures:

- name: order_total

description: The total amount for each order including taxes.

agg: sum

expr: amount

- name: order_count

expr: 1

agg: sum

- name: customers_with_orders

description: Distinct count of customers placing orders

agg: count_distinct

expr: customer_id

- name: order_value_p99

expr: amount

agg: percentile

agg_params:

percentile: 0.99

use_discrete_percentile: True

use_approximate_percentile: False

# Newly added

metrics:

# Simple type metrics

- name: "order_total"

description: "Sum of orders value"

type: simple

label: "order_total"

type_params:

measure: order_total

- name: "order_count"

description: "number of orders"

type: simple

label: "order_count"

type_params:

measure: order_count

- name: large_orders

description: "Count of orders with order total over 20."

type: simple

label: "Large Orders"

type_params:

measure: order_count

filter: |

{{ Dimension('order_id__order_total_dim') }} >= 20

# Ratio type metric

- name: "avg_order_value"

label: "avg_order_value"

description: "average value of each order"

type: ratio

type_params:

numerator: order_total

denominator: order_count

# Cumulative type metrics

- name: "cumulative_order_amount_mtd"

label: "cumulative_order_amount_mtd"

description: "The month to date value of all orders"

type: cumulative

type_params:

measure: order_total

grain_to_date: month

# Derived metric

- name: "pct_of_orders_that_are_large"

label: "pct_of_orders_that_are_large"

description: "percent of orders that are large"

type: derived

type_params:

expr: large_orders/order_count

metrics:

- name: large_orders

- name: order_count

Add second semantic model to your project

Great job, you've successfully built your first semantic model! It has all the required elements: entities, dimensions, measures, and metrics.

Let’s expand your project's analytical capabilities by adding another semantic model in your other marts model, such as: dim_customers.yml.

After setting up your orders model:

- In the

metricssub-directory, create the filedim_customers.yml. - Copy the following query into the file and click Save.

semantic_models:

- name: customers

defaults:

agg_time_dimension: most_recent_order_date

description: |

semantic model for dim_customers

model: ref('dim_customers')

entities:

- name: customer

expr: customer_id

type: primary

dimensions:

- name: customer_name

type: categorical

expr: first_name

- name: first_order_date

type: time

type_params:

time_granularity: day

- name: most_recent_order_date

type: time

type_params:

time_granularity: day

measures:

- name: count_lifetime_orders

description: Total count of orders per customer.

agg: sum

expr: number_of_orders

- name: lifetime_spend

agg: sum

expr: lifetime_value

description: Gross customer lifetime spend inclusive of taxes.

- name: customers

expr: customer_id

agg: count_distinct

metrics:

- name: "customers_with_orders"

label: "customers_with_orders"

description: "Unique count of customers placing orders"

type: simple

type_params:

measure: customers

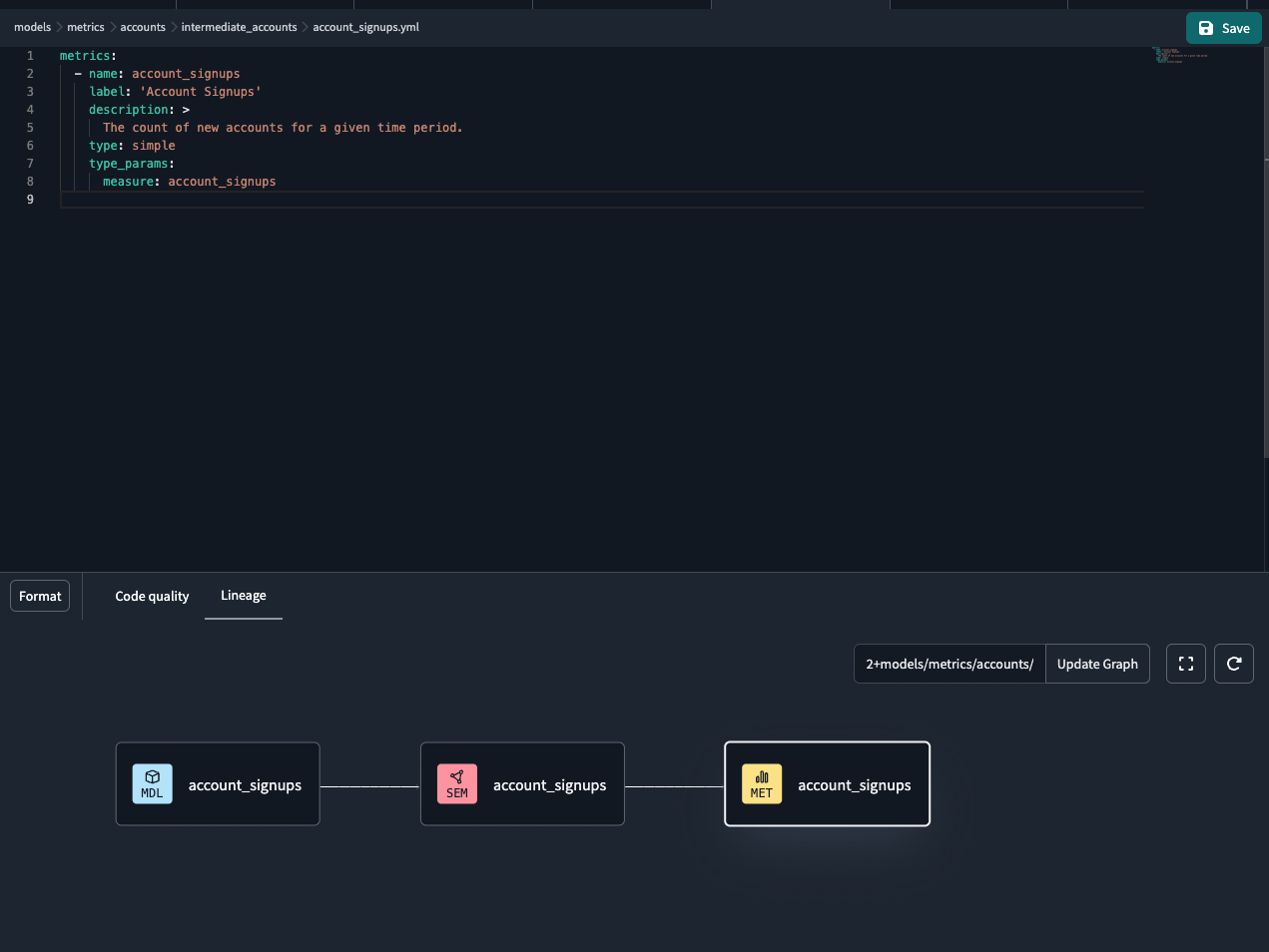

This semantic model uses simple metrics to focus on customer metrics and emphasizes customer dimensions like name, type, and order dates. It uniquely analyzes customer behavior, lifetime value, and order patterns.

Test and query metrics

To work with metrics in dbt, you have several tools to validate or run commands. Here's how you can test and query metrics depending on your setup:

- dbt Cloud IDE users — Currently, running MetricFlow commands directly in the dbt Cloud IDE isn't supported, but is coming soon. You can view metrics visually through the DAG in the Lineage tab without directly running commands.

- dbt Cloud CLI users — The dbt Cloud CLI enables you to run MetricFlow commands to query and preview metrics directly in your command line interface.

- dbt Core users — Use the MetricFlow CLI for command execution. While this guide focuses on dbt Cloud users, dbt Core users can find detailed MetricFlow CLI setup instructions in the MetricFlow commands page. Note that to use the dbt Semantic Layer, you need to have a Team or Enterprise account.

Alternatively, you can run commands with SQL client tools like DataGrip, DBeaver, or RazorSQL.

dbt Cloud IDE users

You can view your metrics in the dbt Cloud IDE by viewing them in the Lineage tab. The dbt Cloud IDE Status button (located in the bottom right of the editor) displays an Error status if there's an error in your metric or semantic model definition. You can click the button to see the specific issue and resolve it.

Once viewed, make sure you commit and merge your changes in your project.

dbt Cloud CLI users

This section is for dbt Cloud CLI users. MetricFlow commands are integrated with dbt Cloud, which means you can run MetricFlow commands as soon as you install the dbt Cloud CLI. Your account will automatically manage version control for you.

Refer to the following steps to get started:

- Install the dbt Cloud CLI (if you haven't already). Then, navigate to your dbt project directory.

- Run a dbt command, such as

dbt parse,dbt run,dbt compile, ordbt build. If you don't, you'll receive an error message that begins with: "ensure that you've ran an artifacts....". - MetricFlow builds a semantic graph and generates a

semantic_manifest.jsonfile in dbt Cloud, which is stored in the/targetdirectory. If using the Jaffle Shop example, rundbt seed && dbt runto ensure the required data is in your data platform before proceeding.

When you make changes to metrics, make sure to run dbt parse at a minimum to update the dbt Semantic Layer. This updates the semantic_manifest.json file, reflecting your changes when querying metrics. By running dbt parse, you won't need to rebuild all the models.

-

Run

dbt sl --helpto confirm you have MetricFlow installed and that you can view the available commands. -

Run

dbt sl query --metrics <metric_name> --group-by <dimension_name>to query the metrics and dimensions. For example, to query theorder_totalandorder_count(both metrics), and then group them by theorder_date(dimension), you would run:dbt sl query --metrics order_total,order_count --group-by order_date -

Verify that the metric values are what you expect. To further understand how the metric is being generated, you can view the generated SQL if you type

--compilein the command line. -

Commit and merge the code changes that contain the metric definitions.

Run a production job

Once you’ve committed and merged your metric changes in your dbt project, you can perform a job run in your deployment environment in dbt Cloud to materialize your metrics. The deployment environment is only supported for the dbt Semantic Layer currently.

- In dbt Cloud, create a new deployment environment or use an existing environment on dbt 1.6 or higher.

- Note — Deployment environment is currently supported (development experience coming soon)

- To create a new environment, navigate to Deploy in the navigation menu, select Environments, and then select Create new environment.

- Fill in your deployment credentials with your Snowflake username and password. You can name the schema anything you want. Click Save to create your new production environment.

- Create a new deploy job that runs in the environment you just created. Go back to the Deploy menu, select Jobs, select Create job, and click Deploy job.

- Set the job to run a

dbt buildand select the Generate docs on run checkbox. - Run the job and make sure it runs successfully.

What’s happening internally?

- Merging the code into your main branch allows dbt Cloud to pull those changes and build the definition in the manifest produced by the run.

- Re-running the job in the deployment environment helps materialize the models, which the metrics depend on, in the data platform. It also makes sure that the manifest is up to date.

- The Semantic Layer APIs pull in the most recent manifest and enables your integration to extract metadata from it.

Set up dbt Semantic Layer

You must be part of the Owner group and have the correct license and permissions to set up the Semantic Layer at the environment and project level.

- Enterprise plan:

- Developer license with Account Admin permissions, or

- Owner with a Developer license, assigned Project Creator, Database Admin, or Admin permissions.

- Team plan: Owner with a Developer license.

- Free trial: You are on a free trial of the Team plan as an Owner, which means you have access to the dbt Semantic Layer.

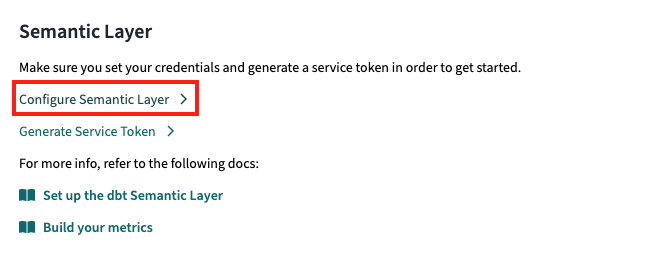

1. Select environment

Select the environment where you want to enable the Semantic Layer:

- Navigate to Account settings in the navigation menu.

- On the Settings left sidebar, select the specific project you want to enable the Semantic Layer for.

- In the Project details page, navigate to the Semantic Layer section. Select Configure Semantic Layer.

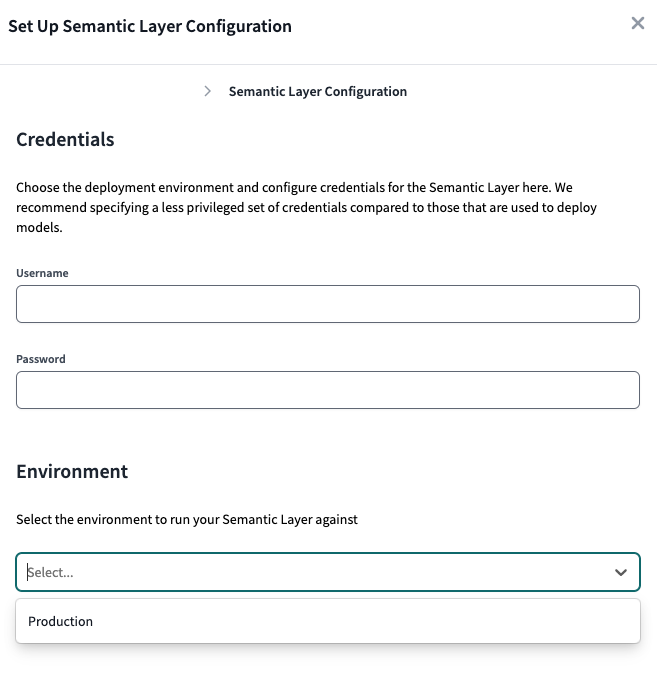

- In the Set Up Semantic Layer Configuration page, select the deployment environment you want for the Semantic Layer and click Save. This provides administrators with the flexibility to choose the environment where the Semantic Layer will be enabled.

dbt Cloud Enterprise plans can add multiple credentials and have a different set up. Skip to Add more credentials for more configuration details.

2. Add a credential and create service tokens

The dbt Semantic Layer uses service tokens for authentication which are tied to an underlying data platform credential that you configure. The credential configured is used to execute queries that the Semantic Layer issues against your data platform. This credential controls the physical access to underlying data accessed by the Semantic Layer, and all access policies set in the data platform for this credential will be respected.

dbt Cloud Enterprise plans can add multiple credentials and map those to service tokens. Refer to Add more credentials for more information.

- In the Set Up Semantic Layer Configuration page, enter the credentials specific to your data platform that you want the Semantic Layer to use.

- Use credentials with minimal privileges. The Semantic Layer requires read access to the schema(s) containing the dbt models used in your semantic models for downstream applications

- Note, environment variables such as

{{env_var('DBT_WAREHOUSE')}, aren't supported in the dbt Semantic Layer yet. You must use the actual credentials.

Enter the credentials specific to your data platform that you want the Semantic Layer to use and select the deployment environment.

Enter the credentials specific to your data platform that you want the Semantic Layer to use and select the deployment environment.- Create a Service Token after you add the credential.

- Enterprise plans: Name and generate a service token on the credential page directly.

- Team plans: You can return to the Project Details page and click the Generate a Service Token button.

- Name the token and save it. Once the token is generated, you won't be able to view this token again so make sure to record it somewhere safe.

Teams plans can create multiple service tokens that map to one underlying credential. Adding multiple credentials for tailored access is available for Enterprise plans.

Book a free live demo to discover the full potential of dbt Cloud Enterprise.

3. View connection detail

-

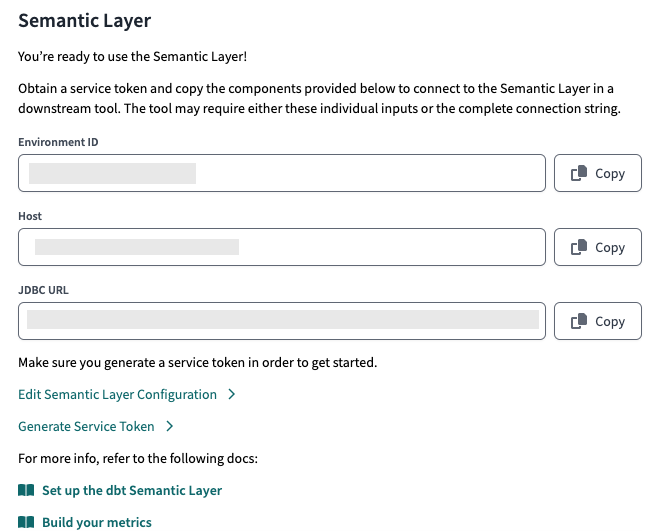

Go back to the Project details page for connection details to connect to downstream tools.

-

Copy and share the environment ID, service token, host, as well as the service token name to the relevant teams for BI connection set up. If your tool uses the GraphQL API, save the GraphQL API host information instead of the JDBC URL.

For info on how to connect to other integrations, refer to Available integrations.

After configuring, you'll be provided with the connection details to connect to you downstream tools.

After configuring, you'll be provided with the connection details to connect to you downstream tools.4. Add more credentials enterprise

dbt Cloud Enterprise plans can optionally add multiple credentials and map them to service tokens, offering more granular control and tailored access for different teams, which can then be shared to relevant teams for BI connection setup. These credentials control the physical access to underlying data accessed by the Semantic Layer.

We recommend configuring credentials and service tokens to reflect your teams and their roles. For example, create tokens or credentials that align with your team's needs, such as providing access to finance-related schemas to the Finance team.

Note that:

- Admins can link multiple service tokens to a single credential within a project, but each service token can only be linked to one credential per project.

- When you send a request through the APIs, the service token of the linked credential will follow access policies of the underlying view and tables used to build your semantic layer requests.

- Environment variables, like

{{env_var('DBT_WAREHOUSE')}aren't supported the dbt Semantic Layer yet. You must use the actual credentials instead.

To add multiple credentials and map them to service tokens:

- After configuring your environment, on the Credentials & service tokens page click the Add Semantic Layer credential button to configure the credential for your data platform.

- On the Create New Semantic Layer Credential page, you can create multiple credentials and map them to a service token.

- In the Add credentials section, fill in the data platform's credential fields. We recommend using “read-only” credentials.

-

In the Map new service token section, map a service token to the credential you configured in the previous step. dbt Cloud automatically selects the service token permission set you need (Semantic Layer Only and Metadata Only).

- To add another service token, click Add service token under the Linked service tokens section.

-

Click Save to link the service token to the credential. Remember to copy and save the service token securely, as it won't be viewable again after generation.

-

To delete a credential, go back to the Semantic Layer & Credentials page. Select Delete credential to remove a credential and click Save.

When you delete a credential, any service tokens mapped to that credential in the project will no longer work and will break for any end users.

Additional configuration

The following are the additional flexible configurations for Semantic Layer credentials.

Unlink service tokens

- Unlink a service token from the credential by clicking Unlink under the Linked service tokens section. If you try to query the Semantic Layer with an unlinked credential, you'll experience an error in your BI tool because no valid token is mapped.

Manage from service token page

View credential from service token

- View your Semantic Layer credential directly by navigating to the API tokens and then Service tokens page.

- Select the service token to view the credential it's linked to. This is useful if you want to know which service tokens are mapped to credentials in your project.

Create a new service token

- From the Service tokens page, create a new service token and map it to the credential(s) (assuming the semantic layer permission exists). This is useful if you want to create a new service token and directly map it to a credential in your project.

- Make sure to select the correct permission set for the service token (Semantic Layer Only and Metadata Only).

Query the Semantic Layer

This page will guide you on how to connect and use the following integrations to query your metrics:

The dbt Semantic Layer enables you to connect and query your metric with various available tools like Google Sheets, Hex, Tableau, and more.

Query metrics using other tools such as first-class integrations, Semantic Layer APIs, and exports to expose tables of metrics and dimensions in your data platform and create a custom integration with tools like PowerBI.

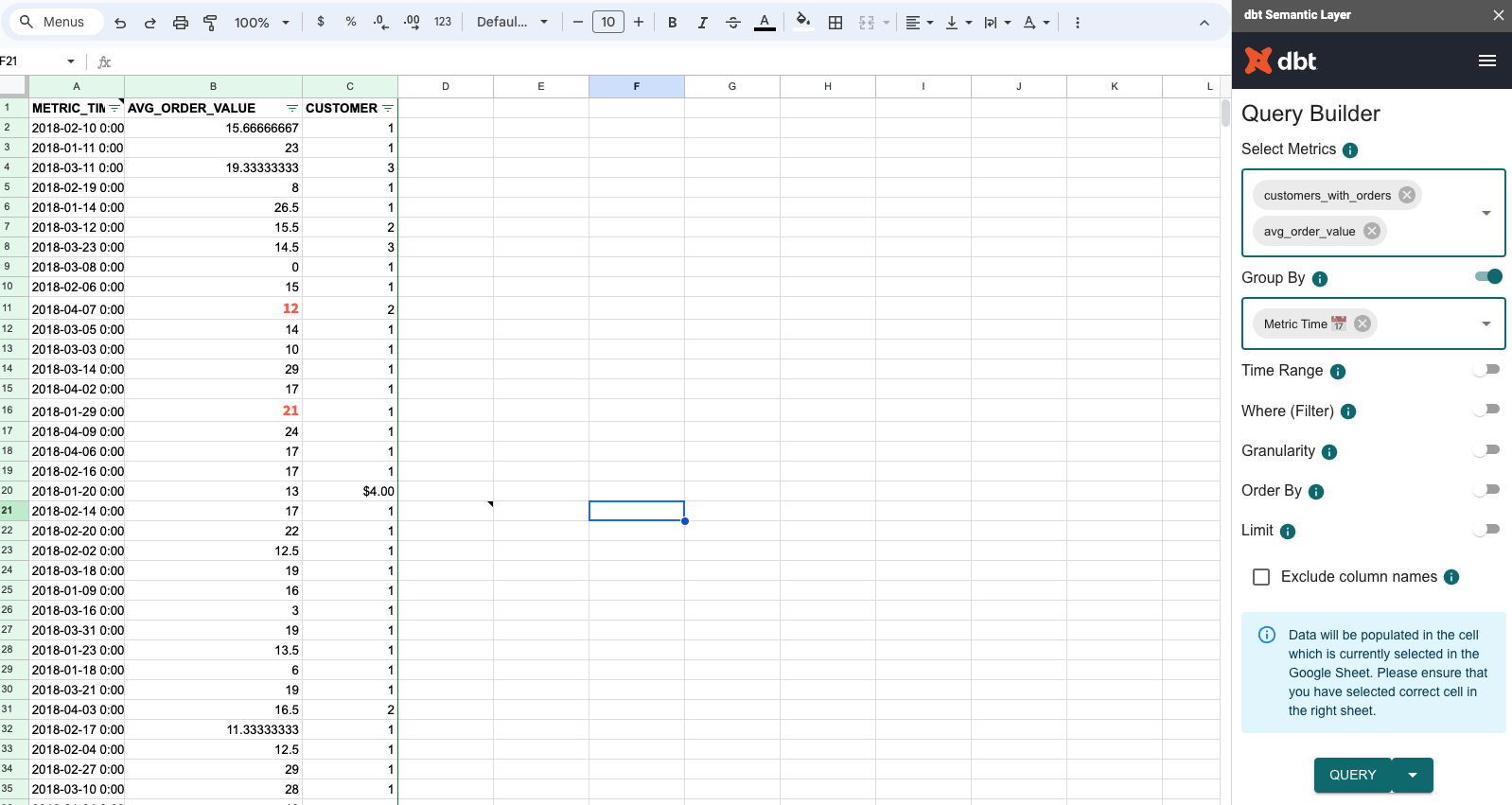

Connect and query with Google Sheets

The Google Sheets integration allows you to query your metrics using Google Sheets. This section will guide you on how to connect and use the Google Sheets integration.

To query your metrics using Google Sheets:

- Make sure you have a Gmail account.

- To set up Google Sheets and query your metrics, follow the detailed instructions on Google Sheets integration.

- Start exploring and querying metrics!

- Query a metric, like

order_total, and filter it with a dimension, likeorder_date. - You can also use the

group_byparameter to group your metrics by a specific dimension.

- Query a metric, like

Connect and query with Hex

This section will guide you on how to use the Hex integration to query your metrics using Hex. Select the appropriate tab based on your connection method:

- Query Semantic Layer with Hex

- Getting started with the Semantic Layer workshop

- Navigate to the Hex login page.

- Sign in or make an account (if you don’t already have one).

- You can make Hex free trial accounts with your work email or a .edu email.

- In the top left corner of your page, click on the HEX icon to go to the home page.

- Then, click the + New project button on the top right.

- Go to the menu on the left side and select Data browser. Then select Add a data connection.

- Click Snowflake. Provide your data connection a name and description. You don't need to your data warehouse credentials to use the Semantic Layer.

- Under Integrations, toggle the dbt switch to the right to enable the dbt integration.

- Enter the following information:

- Select your version of dbt as 1.6 or higher

- Enter your environment id

- Enter your service token

- Make sure to click on the Use Semantic Layer toggle. This way, all queries are routed through dbt.

- Click Create connection in the bottom right corner.

- Hover over More on the menu shown in the following image and select dbt Semantic Layer.

- Now, you should be able to query metrics using Hex! Try it yourself:

- Create a new cell and pick a metric.

- Filter it by one or more dimensions.

- Create a visualization.

- Click on the link provided to you in the workshop’s chat.

- Look at the Pinned message section of the chat if you don’t see it right away.

- Enter your email address in the textbox provided. Then, select SQL and Python to be taken to Hex’s home screen.

- Then click the purple Hex button in the top left corner.

- Click the Collections button on the menu on the left.

- Select the Semantic Layer Workshop collection.

- Click the Getting started with the dbt Semantic Layer project collection.

- To edit this Hex notebook, click the Duplicate button from the project dropdown menu (as displayed in the following image). This creates a new copy of the Hex notebook that you own.

- To make it easier to find, rename your copy of the Hex project to include your name.

-

Now, you should be able to query metrics using Hex! Try it yourself with the following example queries:

- In the first cell, you can see a table of the

order_totalmetric over time. Add theorder_countmetric to this table. - The second cell shows a line graph of the

order_totalmetric over time. Play around with the graph! Try changing the time grain using the Time unit drop-down menu. - The next table in the notebook, labeled “Example_query_2”, shows the number of customers who have made their first order on a given day. Create a new chart cell. Make a line graph of

first_ordered_atvscustomersto see how the number of new customers each day changes over time. - Create a new semantic layer cell and pick one or more metrics. Filter your metric(s) by one or more dimensions.

- In the first cell, you can see a table of the

What's next

Great job on completing the comprehensive dbt Semantic Layer guide 🎉! You should hopefully have gained a clear understanding of what the dbt Semantic Layer is, its purpose, and when to use it in your projects.

You've learned how to:

- Set up your Snowflake environment and dbt Cloud, including creating worksheets and loading data.

- Connect and configure dbt Cloud with Snowflake.

- Build, test, and manage dbt Cloud projects, focusing on metrics and semantic layers.

- Run production jobs and query metrics with our available integrations.

For next steps, you can start defining your own metrics and learn additional configuration options such as exports, fill null values, implementing dbt Mesh with the Semantic Layer, and more.

Here are some additional resources to help you continue your journey: